Forecasting the Impact of AI on Credit Risk Management in Consumer Lending: Part 1 Draft

Background

Recently, I have been exploring AI 2027, a detailed month-by-month forecast of artificial intelligence advancements through 2027. This resource offers a comprehensive framework outlining how AI is expected to evolve over the next three years. Inspired by this approach, I aim to conduct a similar thought experiment focused specifically on the impact of AI within my current field: Credit Risk Management in consumer lending. I also find it engaging and insightful to forecast future developments systematically. My primary contribution to this exercise is my industry expertise and experience.

Abstraction of Credit Risk Management

During interviews, I have often been asked to describe the day-to-day responsibilities of a credit risk manager. Reflecting on this question periodically has been rewarding, as the need to provide concise answers helps me distill and better understand the key components influencing a credit risk manager's outcomes. In this context, I aim to present an abstraction that strikes a balance—not overly generic, such as describing the manager merely as an agent maximizing company objectives, nor excessively detailed, such as delving into the fine-tuning of specific policies. The chosen level of abstraction reflects my personal judgment and is, in my view, sufficient for predicting the future impact of AI.

A Picture of A Generic Agent Alex

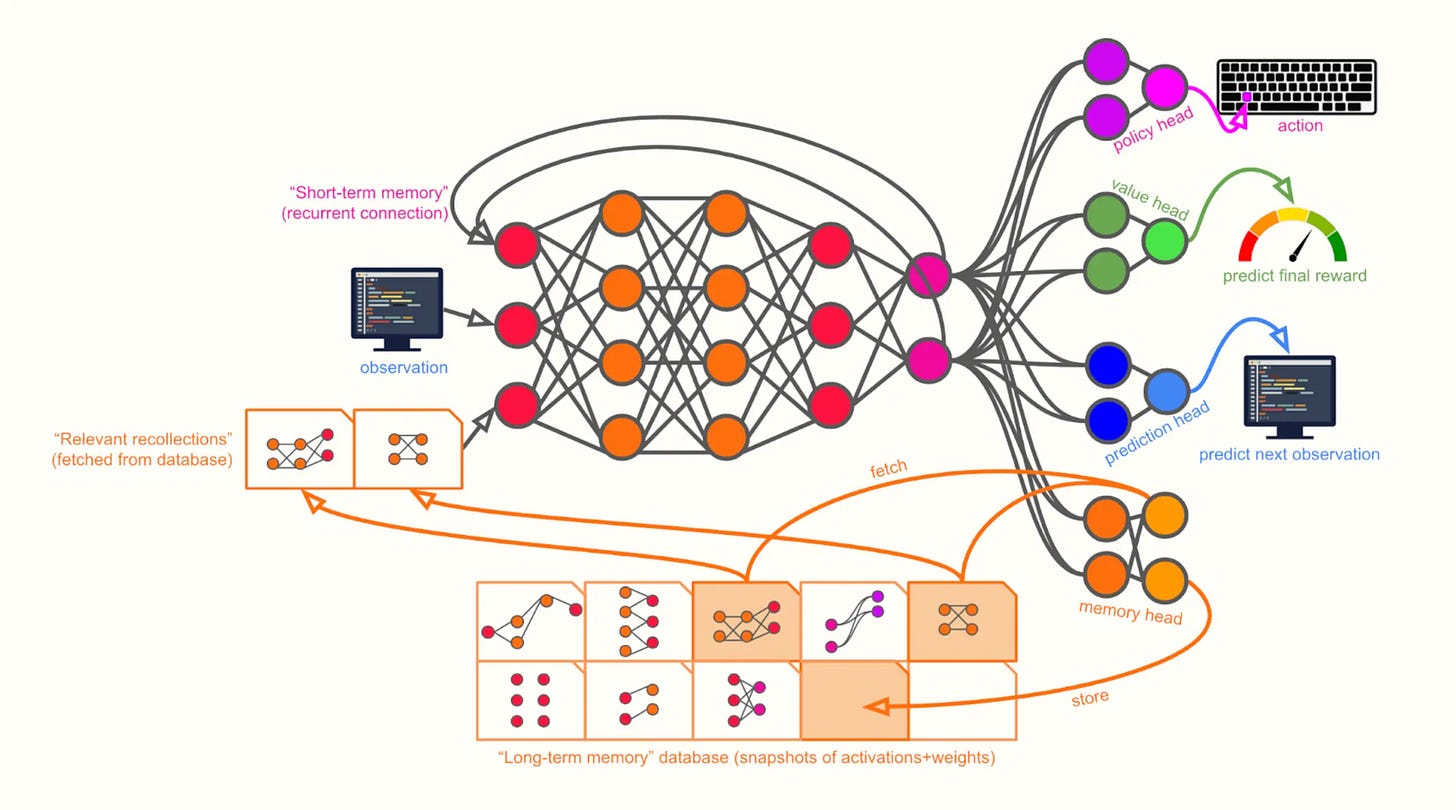

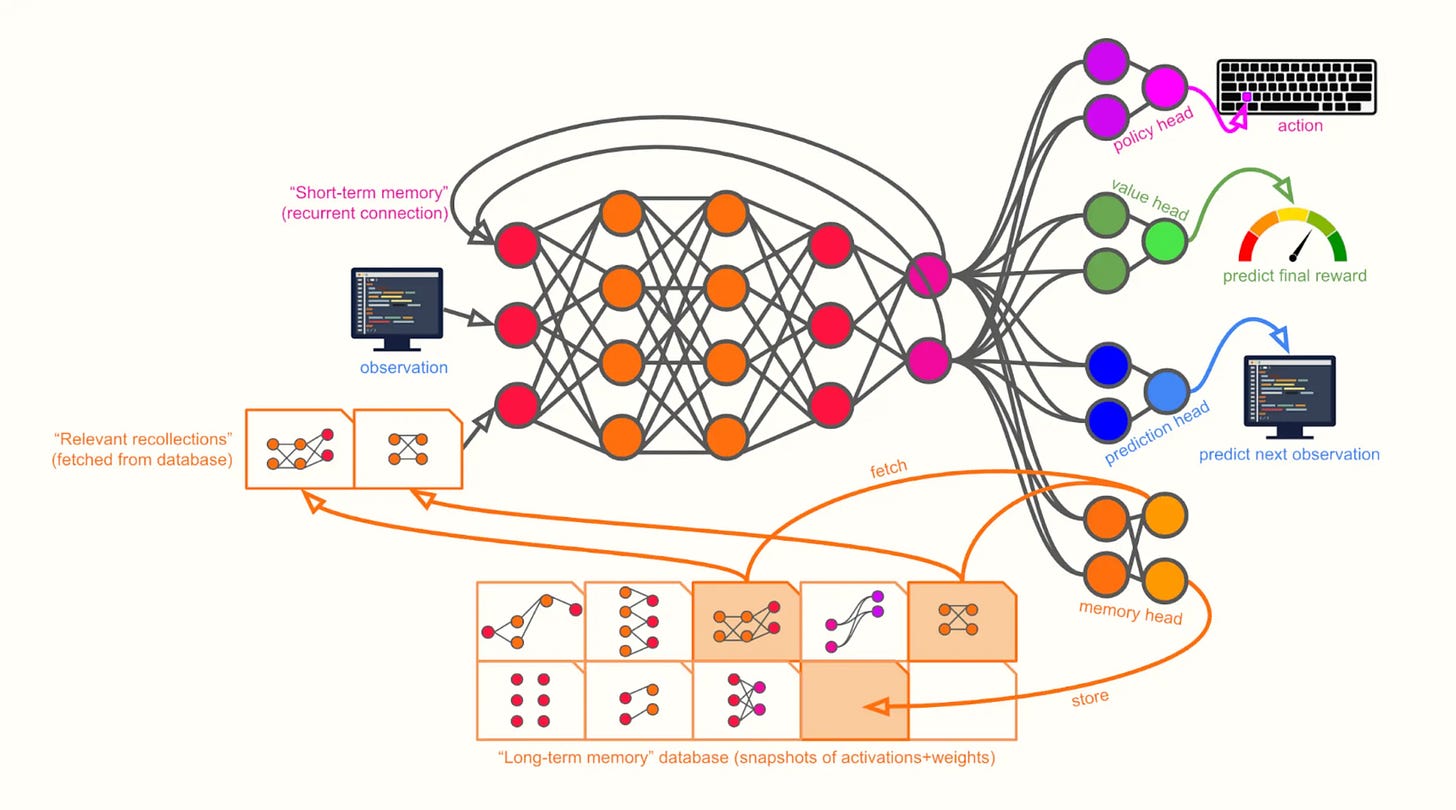

The agent capability imagined is based on the article mentioned in AI 2027 and originally posted on LessWrong.

1 · Major building blocks

| Block | What it is | Key sub-parts / tricks | Why it matters |
| --- | --- | --- | --- |
| Sequence core (RNN / Transformer / hybrid) | Main neural network that digests time-series data | • Recurrent hidden state (short-term memory)<br>• Optional attention over a sliding window of the last K observations | Lets Alex reason over lengthy code–interaction traces instead of single frames |
| Multi-task heads | Separate “heads” branching off the core | Policy head – emits action<br>Value head – predicts final episode return<br>Prediction head – predicts next observation<br>Memory head – read / write keys for long-term memory | Auxiliary losses (value & prediction) speed learning; memory head is the bridge to long-term store |
| Long-term-memory DB | Key-value store (on fast local disk or RAM) that persists across millions of steps within an episode | • Stores compressed snapshots of internal activations / weights that Alex decides are useful (“experiences”)<br>• Content-based retrieval (e.g., nearest-neighbour in embedding space) | Overcomes the vanishing-gradient / context-window limits of the core network |
| External computer | The keyboard-and-screen interface that forms the RL environment | • Observation = rendered screen (pixels, DOM, or latent embedding)<br>• Action = low-level keystroke / mouse event | Gives Alex the same “motor” & “sensory” surface a human programmer has |
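To make the "content-based retrieval" idea of the long-term-memory DB concrete, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the class name `MemoryDB`, the use of cosine similarity, and the two-dimensional keys are all hypothetical and are not taken from the AI 2027 material. A real system would use learned embeddings and an approximate nearest-neighbour index rather than a linear scan.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class MemoryDB:
    """Toy key-value store with content-based (nearest-neighbour) retrieval."""
    keys: list = field(default_factory=list)
    values: list = field(default_factory=list)

    def write(self, key, value):
        """Store a value under an embedding key."""
        self.keys.append(list(key))
        self.values.append(value)

    def read(self, query, k=1):
        """Return the values whose keys are the k most similar to the query."""
        ranked = sorted(
            range(len(self.keys)),
            key=lambda i: cosine_similarity(query, self.keys[i]),
            reverse=True,
        )
        return [self.values[i] for i in ranked[:k]]


# Hypothetical usage: two stored "experiences" and one lookup.
db = MemoryDB()
db.write([1.0, 0.0], "snapshot A")
db.write([0.0, 1.0], "snapshot B")
nearest = db.read([0.9, 0.1], k=1)
```

The point of the sketch is that retrieval is driven by similarity to a query vector rather than by an exact key match, which is what lets the agent recall relevant experiences it stored many steps earlier.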

2 · Per-timestep control-flow (one episode step)

| Step | Input(s) | Processing | Output(s) |
| --- | --- | --- | --- |
| 1. Sense | Screenshot / DOM diff from external computer | – | Latent “observation” vector |
| 2. Think | Observation + previous hidden state + fetched memories | Sequence core updates hidden state | New latent state |
| 3. Decide | Latent state | Heads fire:<br>• Policy head → action logits<br>• Value head → reward estimate<br>• Prediction head → next-obs logits<br>• Memory head → (store_vec, fetch_query) | Keystroke; scalar value; next-obs distribution; memory read/write commands |
| 4. Act | Keystroke | Env executes action | New screen for next step |
| 5. Remember | store_vec, hidden state snapshot | Written into memory DB | – |
| 6. Recall | fetch_query | Retrieved embeddings appended to next step’s input | – |
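The six steps above can be sketched as a single loop. This is a toy illustration, not the architecture itself: every function here (`sense`, `think`, `decide`, `recall`, `run_episode`) is a hypothetical stand-in that I made up, the "screen" is just a string, and the heads use fixed rules instead of learned weights. It is meant only to show how data flows from one step to the next.

```python
def sense(screen):
    """1. Sense: encode the raw screen (here a string) as a latent observation vector."""
    last_char = float(ord(screen[-1])) if screen else 0.0
    return [float(len(screen)), last_char]


def think(observation, hidden, memories):
    """2. Think: stand-in for the sequence core; blends observation, state and memories."""
    recalled = [sum(m[i] for m in memories) for i in range(len(observation))]
    return [0.5 * h + 0.5 * o + 0.1 * r
            for h, o, r in zip(hidden, observation, recalled)]


def decide(state):
    """3. Decide: all four heads read the same latent state."""
    action = "a" if int(state[0]) % 2 == 0 else "b"    # policy head (fixed toy rule)
    value = -abs(state[0])                              # value head (placeholder estimate)
    next_obs = [state[0] + 1.0, float(ord(action))]     # prediction head (placeholder)
    store_vec, fetch_query = list(state), list(state)   # memory head
    return action, value, next_obs, store_vec, fetch_query


def recall(memory_db, query, k=1):
    """6. Recall: return the k stored snapshots whose keys are closest to the query."""
    def sq_dist(key):
        return sum((a - b) ** 2 for a, b in zip(key, query))
    ranked = sorted(memory_db, key=lambda item: sq_dist(item[0]))
    return [snapshot for _, snapshot in ranked[:k]]


def run_episode(steps=3):
    screen, hidden, memory_db, fetched, trace = "", [0.0, 0.0], [], [], []
    for _ in range(steps):
        observation = sense(screen)                           # 1. Sense
        hidden = think(observation, hidden, fetched)          # 2. Think
        action, value, next_obs, store_vec, fetch_query = decide(hidden)  # 3. Decide
        screen = screen + action                              # 4. Act (env appends the keystroke)
        memory_db.append((store_vec, list(hidden)))           # 5. Remember
        fetched = recall(memory_db, fetch_query)              # 6. Recall (used on the next step)
        trace.append(action)
    return screen, memory_db, trace


screen, memory_db, trace = run_episode(steps=3)
```

Note that the memories fetched in step 6 only influence the agent on the following step, which matches the table: retrieved embeddings are appended to the next step's input.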

Specialized Agent in Credit Risk Management

The generic agent described above offers a valuable mental model for understanding the essential components of an agent, closely reflecting key characteristics of human agents. Nevertheless, we observe significant variability in performance among individuals. Within the broader population, only a small subset of people become credit risk managers—some lack interest in the role, while others may not possess the necessary capabilities[1] (innate) or skills (learned). Even within credit risk management teams, performance levels vary considerably. Given that the architecture is general, what factors contribute to these differences?

Based on my experience, I believe the following factors account for these differences. They are also the qualities I would look for as a hiring manager:

Logic and Mathematical Reasoning: Since risk is quantified numerically, strong numerical literacy and the ability to construct models that clearly represent risk and reward are essential. While advanced mathematical expertise is not mandatory, the role demands robust mathematical thinking, including skills in modeling, simulation, optimization, and experimentation (grounded in probability and statistical theory).

Data Analysis: The ability to derive actionable insights from data is essential. This skill encompasses several sub-components, which I have detailed in my Credit Risk Management training material, outlining my approach to effective data analysis.

Contextual Awareness: Strong contextual awareness is essential. Analysts must thoroughly understand the problem at hand, recognize internal and external constraints (such as organizational policies and regulatory requirements), and be mindful of historical precedents, as repeating unnecessary actions can be particularly costly in finance.

Strong judgment: Given any problem, there are infinitely many hypotheses. The ability to effectively assess the likelihood of each hypothesis before initiating detailed analysis is crucial. This skill relies on robust common sense (a well-informed prior probability distribution) and the capacity to reason from first principles (a clear causal model). Strong common sense can be cultivated through experience and by learning from others. Analysts should consciously strive to interpret and understand the world around them to enhance their intuitive judgment. Reasoning from first principles involves systematically tracing observations back to their underlying causes using established causal frameworks.

Coding proficiency: The ability to translate experiments (hypothesis testing) into code is essential, as manual data handling is not scalable. Python and SQL are typically the preferred programming languages. However, language choice becomes less critical for LLM-based agents, which can currently generate code across various languages, although they still encounter limitations when managing large-scale projects. Unlike extensive software development projects, data analysis tasks generally involve shorter and more concise code.

Strong presentation and persuasion skills: Effective communication is essential not only when presenting to human managers but also when interacting with AI agents. It is unlikely that a single AI agent will handle all aspects of credit risk management independently; roles such as maker and checker may still persist among AI systems. Therefore, clearly conveying analytical results and insights to both human and AI counterparts is critical. However, quantifying the effectiveness of communication between AI agents remains an open challenge.

Tool Use: The ability to effectively utilize tools appears to be important. I use the term "appears" because it remains uncertain whether an AI agent will need to interact with traditional human tools such as Excel or navigate a WIMP (Windows • Icons • Menus • Pointer) interface to deploy policies or conduct experiments. If the system is entirely custom-built, these tools might become unnecessary. Nevertheless, I anticipate that proficiency with certain human-oriented tools will be valuable, particularly during initial adoption phases.

The courage to make decisions: Humans generally exhibit risk-averse behavior. After thorough data analysis and consultation with stakeholders, a credit risk manager must ultimately make and execute decisions. Not everyone naturally possesses the courage required for decisive action. Similar to poker players who hesitate to place bets even when the odds favor them, some individuals struggle to commit despite favorable conditions. It remains uncertain whether AI agents will demonstrate similar hesitation or if their decision-making will be purely logical. However, I anticipate that the training environment and accumulated experiences will influence an AI agent's decision-making behavior.

Integrity and Alignment: Finance is a heavily regulated industry, requiring credit risk managers to consistently make decisions that comply with existing rules and regulations. Beyond mere compliance, managers must also interpret the underlying intent of these regulations to proactively adapt to potential future changes. Consequently, an effective AI agent must not only adhere strictly to current regulatory frameworks but also possess the capability to reason about the broader purpose and intent behind these rules.

Prediction Head: The capability to accurately forecast future outcomes is crucial. Human agents typically rely on machine learning models to estimate the probability of default[2]. In contrast, AI agents may inherently possess predictive capabilities—similar to how large language models predict subsequent tokens—or they might adopt predictive tools analogous to those used by humans.
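Several of the qualities above (a model of risk and reward, a predicted probability of default, and the willingness to act when the odds are favorable) can be tied together in a small worked example. The sketch below is a deliberately simplified illustration under my own assumptions: a single-period loan, a hypothetical `loss_given_default` parameter for the fraction of principal lost on default, and made-up numbers. It is not a production credit policy.

```python
def expected_profit(pd, principal, interest_rate, loss_given_default):
    """Expected one-period profit of approving a loan.

    pd: predicted probability of default, between 0 and 1
    loss_given_default: assumed fraction of principal lost if the borrower defaults
    """
    gain_if_repaid = principal * interest_rate
    loss_if_default = principal * loss_given_default
    return (1.0 - pd) * gain_if_repaid - pd * loss_if_default


def break_even_pd(interest_rate, loss_given_default):
    """PD at which expected profit is zero: (1 - pd) * r = pd * L, so pd = r / (r + L)."""
    return interest_rate / (interest_rate + loss_given_default)


def decide(pd, principal, interest_rate, loss_given_default):
    """Approve only when the expected profit is positive."""
    profit = expected_profit(pd, principal, interest_rate, loss_given_default)
    return "approve" if profit > 0 else "decline"


# Hypothetical numbers: 20% interest, 80% of principal lost on default.
threshold = break_even_pd(interest_rate=0.2, loss_given_default=0.8)
decision_low_risk = decide(pd=0.10, principal=1000, interest_rate=0.2, loss_given_default=0.8)
decision_high_risk = decide(pd=0.30, principal=1000, interest_rate=0.2, loss_given_default=0.8)
```

In this toy setup the favorable bet is any applicant whose predicted PD falls below the break-even threshold. The poker analogy corresponds to declining such an applicant anyway, whether the decision-maker is human or an AI agent.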

[1] A useful analogy is to view innate ability as corresponding to an agent's architecture and pretraining, whereas learned skills correspond to fine-tuning or reinforcement learning.

[2] Predictive models may be developed by a dedicated team of data scientists rather than credit risk managers. Nevertheless, credit risk managers typically remain the ultimate decision-makers, delegating the predictive modeling tasks to data scientists.