Reflections from Hackathon@Sea 2025: The Gap Between AI Agent Hype and Reality

This past weekend, I participated in Hackathon@Sea 2025, an internal event hosted by my company. Unsurprisingly, the theme was AI agents, a topic surrounded by immense hype and speculation. The experience offered a clear snapshot of the current state of AI development and crystallized my thoughts on both its potential and its present-day limitations.

The Paradox: AI Lowers the Barrier to Creation, But Widens the Gap to Production

AI has fundamentally lowered the barrier to creating software. With AI coding assistants like GitHub Copilot and modern large language models (LLMs) such as Claude and ChatGPT, developers can build traditional, rule-based applications far faster than was possible only a few years ago.

At the same time, a new kind of application is emerging, one with the LLM itself at its core. Unlike rule-based systems, these applications are probabilistic and adaptive. A chatbot or an AI customer service agent, for instance, will rarely give exactly the same answer to the same question twice.
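To make "probabilistic" concrete: LLMs typically generate text by sampling each next token from a probability distribution, and many APIs expose a temperature parameter that sharpens or flattens that distribution. The sketch below is a toy illustration under that assumption; the candidate tokens and scores are made up, not taken from any real model, and the function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidate words and scores for a single step of a reply.
tokens = ["approved", "pending", "declined"]
logits = [2.0, 1.5, 0.5]

rng = random.Random(0)
replies = [sample_next_token(tokens, logits, temperature=1.0, rng=rng) for _ in range(10)]
print(replies)  # repeated draws for the same "question" need not agree
```

Lowering the temperature concentrates probability on the top-scoring token and makes outputs more repeatable, which is one reason the same prompt can behave differently across deployments with different settings.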

While this makes impressive demos easier than ever to build, it also widens the gap on the path to production. For traditional applications, current AI tools still struggle with the context length that large-scale projects require, and they cannot yet manage the full development lifecycle of coding, testing, debugging, and maintenance. I expect this problem to be solved in the near future, with AI agents eventually handling development end to end.

The second scenario, applications built around an LLM, is more concerning. A pre-trained LLM makes building a proof-of-concept remarkably simple: a custom prompt can instantly produce a chatbot that looks exciting and promising. The crucial question, however, remains: are you confident enough to deploy this prototype for real-world use?

At the hackathon, our team built a prototype that explains risk fluctuations by consolidating information from Google Sheets, dashboards, and Google searches for macroeconomic events. We "succeeded" on our first attempt: the agent produced an explanation. A closer look, however, revealed that the output was superficial, and a huge gap remained between what it produced and what would be genuinely useful.

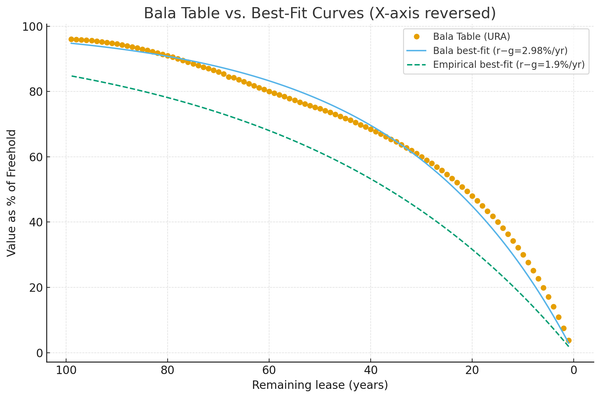

Observation 1: Today's AI Agents Are Architecturally Similar

A striking observation from the competition was how architecturally similar the projects were. The dominant pattern assigned specific tasks to different agents and then consolidated their outputs into a final result. The prevalence of this approach made me ask: what actually differentiates a valuable AI system?
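The "assign tasks to specialist agents, then consolidate" pattern described above is often called fan-out/fan-in. Below is a minimal sketch of it, loosely modelled on our risk prototype. The agent functions are hypothetical stubs returning canned strings; in a real system each would wrap an LLM call with its own prompt and tools, and the consolidation step would likely be another LLM call rather than string joining.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Finding:
    source: str
    summary: str


# Hypothetical specialist agents (stubs standing in for LLM-backed workers).
def sheets_agent(question: str) -> Finding:
    return Finding("sheets", f"Exposure figures relevant to: {question}")


def dashboard_agent(question: str) -> Finding:
    return Finding("dashboard", f"Metric trends relevant to: {question}")


def news_agent(question: str) -> Finding:
    return Finding("news", f"Macroeconomic events relevant to: {question}")


def consolidate(question: str, findings: list[Finding]) -> str:
    """Fan in: merge specialist outputs into a single report."""
    lines = [f"Question: {question}"]
    lines += [f"- [{f.source}] {f.summary}" for f in findings]
    return "\n".join(lines)


def run_pipeline(question: str) -> str:
    agents = [sheets_agent, dashboard_agent, news_agent]
    # Fan out: run each specialist independently and in parallel.
    # pool.map returns results in the same order as `agents`.
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        findings = list(pool.map(lambda agent: agent(question), agents))
    return consolidate(question, findings)


print(run_pipeline("Why did risk exposure rise last week?"))
```

The skeleton is only a few lines, which is exactly why it cannot be the differentiator: the value lies in what each specialist knows and how well the consolidation step reasons over conflicting findings.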

While I don't have a definitive answer, my conviction is that the focus must be on three areas:

- Start with a real, well-defined problem.

- Pay meticulous attention to the technical details of implementation beyond the initial prompt.

- Create a data flywheel, allowing the system to improve over time as it processes more information.
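One simple form of the data flywheel in the last point, assuming human reviewers approve or reject each output: approved input/output pairs are kept and fed back as few-shot examples in future prompts, so the system's guidance grows as it is used. The `Flywheel` class and its prompt format are hypothetical; production systems might instead use approved data for evaluation sets or fine-tuning.

```python
from dataclasses import dataclass, field


@dataclass
class Flywheel:
    examples: list = field(default_factory=list)  # (input, output) pairs a reviewer approved
    max_examples: int = 3  # assumed cap on few-shot examples per prompt

    def record(self, user_input: str, model_output: str, approved: bool) -> None:
        """Keep only reviewer-approved outputs; they become future demonstrations."""
        if approved:
            self.examples.append((user_input, model_output))

    def build_prompt(self, new_input: str) -> str:
        """Prepend the most recent approved examples as few-shot demonstrations."""
        shots = self.examples[-self.max_examples:]
        parts = [f"Input: {i}\nOutput: {o}" for i, o in shots]
        parts.append(f"Input: {new_input}\nOutput:")
        return "\n\n".join(parts)


fw = Flywheel()
fw.record("Risk rose in region A", "Driven by a rate hike; see macro feed", approved=True)
fw.record("Risk fell in region B", "Unclear", approved=False)  # rejected, not reused
print(fw.build_prompt("Risk rose in region C"))
```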

I also noticed a critical weakness in current agentic systems: the lack of robust short-term and long-term memory. This isn't just about connecting to a knowledge base; it requires a mechanism for deciding what to remember, how to recall it, and when to apply it.
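The three decisions named above (what to remember, how to recall, when to apply) can be separated explicitly, even in a toy. The sketch below is hypothetical throughout: importance is supplied by the caller (a real system might ask an LLM to score it), recall uses simple word overlap as a stand-in for embedding similarity, and both thresholds are assumed values.

```python
import re
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    importance: float  # 0..1, how much this item matters


def _words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class AgentMemory:
    min_importance: float = 0.5  # what to remember: write threshold (assumed)
    min_overlap: int = 1  # when to apply: minimum relevance to use an item (assumed)
    items: list = field(default_factory=list)

    def remember(self, text: str, importance: float) -> bool:
        """Store an item only if it is important enough."""
        if importance >= self.min_importance:
            self.items.append(MemoryItem(text, importance))
            return True
        return False

    def recall(self, query: str, k: int = 2) -> list:
        """How to recall: rank items by word overlap with the query, weighted by importance."""
        q = _words(query)
        scored = []
        for item in self.items:
            overlap = len(q & _words(item.text))
            if overlap >= self.min_overlap:
                scored.append((overlap * item.importance, item.text))
        scored.sort(reverse=True)
        return [text for _, text in scored[:k]]


memory = AgentMemory()
memory.remember("User prefers explanations grouped by region", 0.9)
memory.remember("User said hello", 0.1)  # below threshold, not stored
memory.remember("Risk reports should cite the data source", 0.7)
print(memory.recall("Explain the risk change by region"))
```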

Observation 2: Impressive in Theory, Unreliable in Practice

The ease of creating prototypes fuels the hype that AI agents will soon replace a vast range of human work. However, this overlooks the immense challenge of ensuring reliability and usability.

One of the judges asked our group what it would take to close the gap so that our prototype could completely replace a human analyst. My answer was data and context. Current LLMs lack access to the nuanced thought processes of a human expert. Furthermore, in specialized domains like risk management, the feedback signals (or "rewards") that an AI can learn from are sparse and infrequent. This, I believe, is the root cause of why many AI agents are not yet practical for mission-critical tasks. I expect the situation to change, perhaps within the next two to three years, but today the challenge is significant.

Observation 3: The Persistent Challenge of Communicating Intent

Prompt engineering remains a critical skill. To get an LLM to perform a task as intended, one must often provide lengthy, explicit context and instructions. This contrasts sharply with human communication, where we infer intent and requirements with far fewer guidelines.

There are a few reasons for this disparity. First, most LLMs are not trained to proactively clarify a user's intent; they are designed to respond. Second, they often lack the context of past interactions. A memory of previous conversations would let an LLM better understand a user's goals and refine its performance over time.
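Both gaps can be addressed in application code rather than left to the model. A minimal sketch, assuming an earlier (hypothetical) extraction step has already pulled structured fields out of the user's request: the agent first fills gaps from remembered past interactions, and only if something required is still missing does it ask a clarifying question instead of guessing. The field names and questions are illustrative.

```python
# Hypothetical required fields for a risk-explanation request, each with the
# question the agent should ask if that field is missing.
REQUIRED_FIELDS = {
    "portfolio": "Which portfolio or business line should I analyze?",
    "time_window": "Which time period are you asking about?",
}


def next_action(extracted: dict, remembered: dict) -> tuple[str, str]:
    """Return ("clarify", question) or ("answer", task description)."""
    # Values stated in the current request override remembered ones.
    merged = {**remembered, **{k: v for k, v in extracted.items() if v}}
    missing = [name for name in REQUIRED_FIELDS if not merged.get(name)]
    if missing:
        # Ask about the first missing field rather than guessing.
        return ("clarify", REQUIRED_FIELDS[missing[0]])
    return ("answer", f"Explaining risk for {merged['portfolio']} over {merged['time_window']}")


print(next_action({"portfolio": None, "time_window": "last week"}, {}))
print(next_action({"time_window": "last week"}, {"portfolio": "consumer loans"}))
```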

What Differentiates an AI Agent from Automation?

Many of today's AI agents are built with orchestration frameworks like LangChain, which can make them feel like sophisticated automation tools executing a predetermined series of steps. This raises a fundamental question: what is the true difference between an AI agent and traditional automation?

The distinction lies in their core nature. Automation follows a deterministic set of rules: if X happens, do Y. It is best suited for tasks that can be clearly mapped out in advance. An AI agent, however, is fundamentally probabilistic. Rather than executing explicit rules, it relies on the model's reasoning to decide which steps to take, which lets it handle inputs that no predefined rule anticipated.

The guiding principle should be: if you can describe a task with "if-else" logic, use automation. If the task requires reasoning, adaptation, and dealing with ambiguity, then an agentic system is the more powerful approach.
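One way to apply this principle in a single system is to try explicit rules first and hand off to an agent only when no rule applies. The sketch below uses a customer-service example; the ticket types, the 1000 limit, and `stub_agent` (a placeholder for an LLM-backed agent) are all hypothetical.

```python
from typing import Callable, Optional


def rule_based_handler(ticket: dict) -> Optional[str]:
    """Automation: explicit if-else rules. The same input always gives the same output."""
    if ticket.get("type") == "password_reset":
        return "Sent password reset link"
    if ticket.get("type") == "limit_increase" and ticket.get("amount", 0) <= 1000:
        return "Auto-approved limit increase"
    return None  # no rule covers this case


def route(ticket: dict, agent: Callable[[dict], str]) -> str:
    """Use automation when a rule matches; otherwise defer to the agentic system."""
    result = rule_based_handler(ticket)
    if result is not None:
        return result
    return agent(ticket)


def stub_agent(ticket: dict) -> str:
    # Hypothetical placeholder: a real agent would reason over the request with an LLM.
    return f"Escalated to agent: {ticket.get('text', '')}"


print(route({"type": "password_reset"}, stub_agent))
print(route({"text": "Why did my risk score change?"}, stub_agent))
```

Keeping the deterministic path separate makes the predictable cases cheap, testable, and auditable, while the agent's probabilistic behavior is confined to the requests that genuinely need reasoning.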